It even got these lines on the last page, 293, right: `nabi,"nabi Big Tab HD\xe2\x84\xa2 20""",DMTAB-NV20A,DMTAB-NV20A`

TabulaPDF and Tabula-Extractor are really, really cool for jobs like this!

Here is an asciinema screencast (which you can also download and replay locally in your Linux/Mac OS X/Unix terminal with the help of the `asciinema` command line tool), starring tabula-extractor:

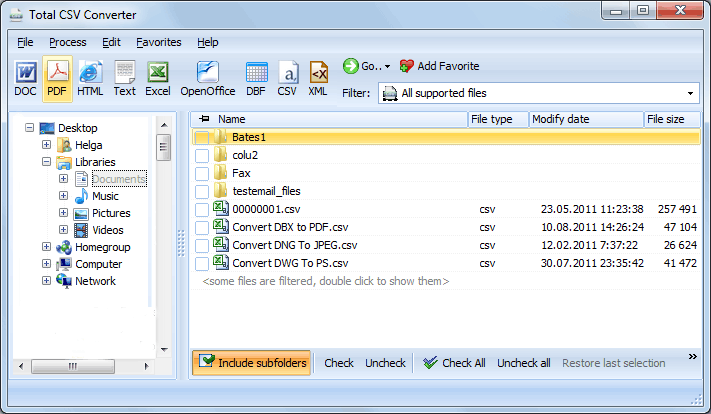

ALTERNATIVE TO PDF2CSV CONVERT PDF

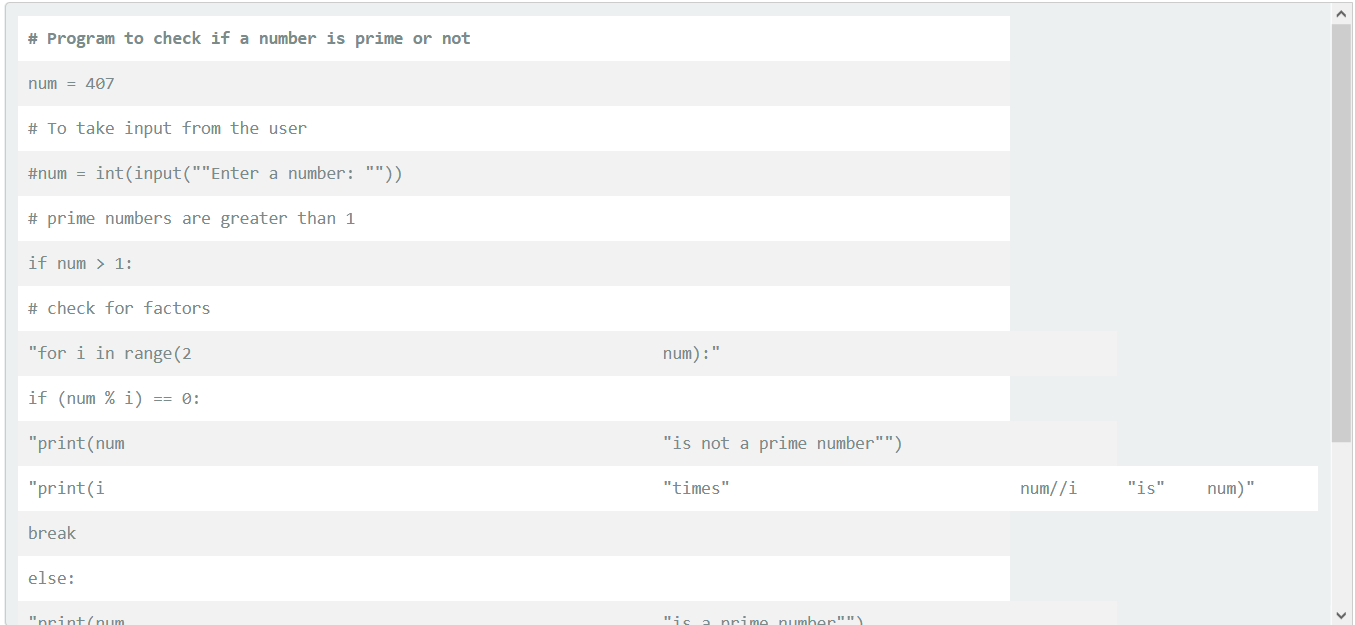

Since `~/bin/` is in my `$PATH`, I just run `$ tabulaextr --pages all \` to extract all the tables from all pages and convert them to a single CSV file.

The first ten (out of a total of 8727) lines of the CSV look like this: `$ head DAC06E7D1302B790429AF6E84696FCFAB20B.csv` starts with the header row `Retail Branding,Marketing Name,Device,Model`. The screenshot shows how these lines look in the original PDF.
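A quick sanity check on a CSV like this one can be sketched as follows. This is only an illustration: `csv_summary` is a hypothetical helper name, not part of Tabula, and it relies only on the standard `wc`, `tr` and `head` tools.

```shell
# Hypothetical helper: print the total line count of a CSV file,
# followed by its first ten lines (the same view that `head` gives).
csv_summary() {
    csv_file="$1"
    # `wc -l` pads its output with spaces on some systems, so strip them.
    printf 'total lines: %s\n' "$(wc -l < "$csv_file" | tr -d ' ')"
    head -n 10 "$csv_file"
}
```

Calling `csv_summary DAC06E7D1302B790429AF6E84696FCFAB20B.csv` would then show the line count above the header row and the first data rows.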

While in this case the pdftotext method works with reasonable effort, there may be cases where not every page has the same column widths (as your rather benign PDF has). Here the not-so-well-known, but pretty cool Free and Open Source Software Tabula-Extractor is the best choice.

I myself am using the direct GitHub checkout: `$ cd $HOME`, `$ mkdir svn-stuff`, `$ cd svn-stuff`.

I wrote myself a pretty simple wrapper script, `~/bin/tabulaextr`, which changes into the checkout's `bin` directory: `cd $HOME/svn-stuff/git.tabula-extractor/bin`.
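A wrapper of this kind can be sketched as a shell function. This is a minimal sketch under stated assumptions, not the author's exact script: it assumes the checkout lives in `$HOME/svn-stuff/git.tabula-extractor` and that its `bin` directory contains an executable named `tabula`. The `TABULA_BIN_DIR` override is a hypothetical convenience added here.

```shell
# Sketch of a wrapper around a tabula-extractor checkout.
# Assumptions: the checkout path below and an executable called `tabula`
# inside its bin directory. TABULA_BIN_DIR is a hypothetical override.
tabulaextr() (
    bin_dir="${TABULA_BIN_DIR:-$HOME/svn-stuff/git.tabula-extractor/bin}"
    # The function body runs in a subshell, so this `cd` does not
    # change the caller's working directory.
    cd "$bin_dir" || exit 1
    # Pass all arguments through unchanged.
    ./tabula "$@"
)
```

Because the wrapper changes directory before running the tool, input files should be given as absolute paths, for example `tabulaextr --pages all "$(pwd)/input.pdf" > output.csv` (the file names are placeholders).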
